What Is “Vibe Coding” and Why Is It Trending?

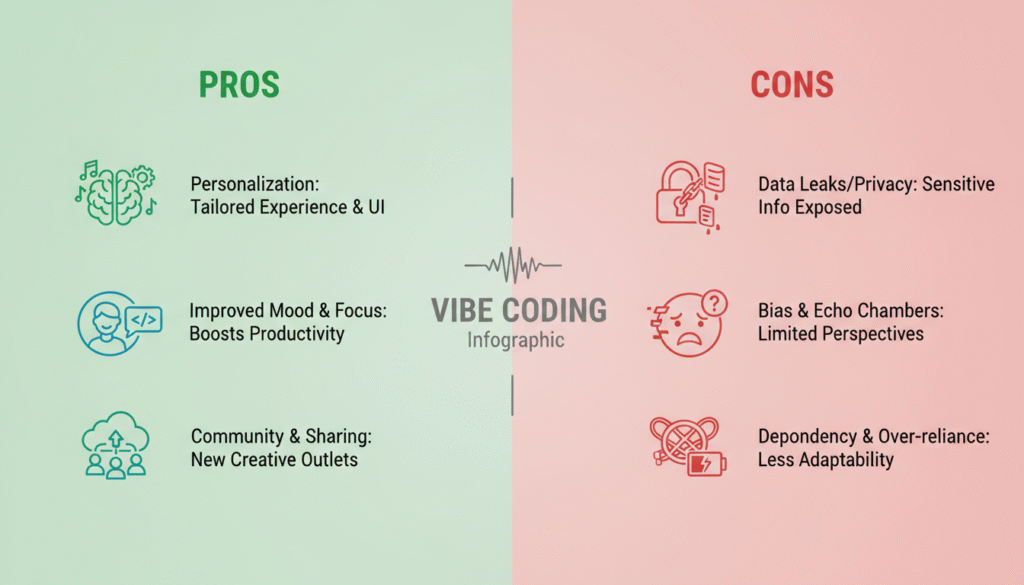

Vibe coding sounds playful, almost harmless. It is a new trend where apps claim to read emotions and design interactions based on your mood. At its core, it is about personalization. Yet behind the fun, there are serious AI-driven personalization risks that many users do not recognize. These apps do not just create songs or visuals. They capture feelings, habits, and patterns that go far beyond ordinary coding practices. That is where vibe coding privacy concerns begin.

Here’s the thing: new apps rise because people trust trends. On platforms like TikTok, influencers praise vibe coding as creative and cool. The social pull is powerful, and people download without asking questions. This is how user trust in new coding apps builds so rapidly. But trust can turn into trouble when apps collect more than they promise.

The Hidden Privacy Risks Behind Vibe Coding

The truth is, most vibe coding apps take more than what users knowingly share. They track sensitive data such as moods, reactions, and even private text inputs. This is not just personalization. It is a form of unethical data collection that thrives on a lack of transparency.

To make things worse, data rarely stays within one app. When apps link with advertisers, fitness trackers, or music platforms, information spreads. These third-party data sharing risks mean your emotional data may land in places you never expected. Once shared, it cannot be fully controlled.

| Risk Type | Example | Impact |
| --- | --- | --- |
| Over-collection | Tracking moods every minute | Creates emotional profiles |
| Third-party use | Sharing with advertisers | Targeted manipulation |
| Poor storage | Weak encryption | Risk of hacking |

Real-World Cases of Sensitive Data Leaks

Let’s break it down with real examples. In 2023, researchers reported data leaks in which vibe-based platforms accidentally exposed logs of stress levels and private messages. These logs were visible to outsiders because of weak cybersecurity and app vulnerabilities. The leaked details were not just numbers. They revealed private emotions and moments that users thought were safe.

The consequences of sensitive user data exposure go beyond embarrassment. Businesses face lawsuits, and users face identity theft or emotional harm. For instance, one study reported users quitting apps after discovering leaks of mood-tracking records. For businesses, the costs included lost trust and multi-million-dollar settlements.

Why Users Ignore Privacy Warnings

Most people see a warning screen, but they skip past it. Why? Convenience. The psychology is simple. People crave fun, music, and social approval, so they trade safety for entertainment. That is why psychological manipulation in apps works so well.

Another reason is dark UX patterns. App designers intentionally hide settings, use confusing buttons, or pressure you with pop-ups. Users end up agreeing to share more than they intended. This silent trickery is one of the strongest forces behind vibe coding privacy concerns.

The Role of Developers and Tech Companies

When data leaks occur, the blame does not rest only on users. Developers and companies play a central role. They must accept accountability for every piece of information they collect. If they mishandle emotional data, they are responsible.

This is where ethical app development becomes vital. Developers can design features that protect privacy first. That means encryption, limited data collection, and open policies. Without this approach, vibe coding becomes a playground for abuse rather than creativity.
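Limited data collection is the easiest of these ideas to show in code. Here is a minimal sketch, assuming a hypothetical app that receives raw event dictionaries before storing them. The field names and the `ALLOWED_FIELDS` allowlist are invented for illustration and do not come from any real app.

```python
"""Sketch of data minimization: keep only the fields a feature needs.

All field names below are hypothetical.
"""

# Fields the (hypothetical) playlist feature actually needs.
ALLOWED_FIELDS = {"session_id", "mood_label", "timestamp"}


def minimize(event: dict) -> dict:
    """Return a copy of `event` that contains only allowlisted fields."""
    return {key: value for key, value in event.items() if key in ALLOWED_FIELDS}


if __name__ == "__main__":
    raw_event = {
        "session_id": "abc123",
        "mood_label": "calm",
        "timestamp": "2024-01-01T10:15:00",
        "private_note": "rough day at work",  # free text the feature does not need
        "contacts": ["alice", "bob"],         # unrelated to the feature
    }
    # Only the allowlisted fields survive; the note and contacts are dropped.
    print(minimize(raw_event))
```

An allowlist is used instead of a blocklist so that any new field a later release adds is dropped by default until someone decides it is needed.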

Legal and Regulatory Challenges

Here’s the problem. Current data privacy regulations such as GDPR and CCPA were not built with vibe coding in mind. These rules cover personal data, but emotional data exists in a gray zone. This creates massive gaps that allow apps to operate without proper oversight.

To close the gap, regulators need new frameworks. They must adapt to the future of AI in app development, where apps read emotions instead of just clicks. International cooperation is also essential, since vibe coding apps spread across borders. Without stronger rules, users remain exposed.

How Users Can Protect Their Data

You do not have to abandon vibe coding altogether. A few simple digital safety habits reduce the risks. Reviewing privacy settings, avoiding unknown apps, and using two-factor authentication all make a difference. These small actions give you back some control over your data.

Another safeguard is technology itself. VPNs, encrypted messengers, and privacy tools add extra layers of protection. These are not perfect shields, but they reduce how much of your data is exposed when app vulnerabilities lead to leaks. Users who combine awareness with tools stand in a stronger position.

The Future of Vibe Coding: Promise vs. Peril

The story is not all negative. There are ways to redesign vibe coding into something safer. Developers can use secure coding best practices, build apps that collect less data, and focus on anonymization. Innovation can exist without exploitation.
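To make "collect less and anonymize" concrete, here is a rough sketch. It assumes hypothetical per-minute mood readings with `user_id`, `timestamp`, and `mood_score` fields, and a placeholder secret key. Note that keyed hashing is pseudonymization, which is weaker than true anonymization: anyone who holds the key can link records back to a user. The second function collapses minute-level readings into one daily average, so the detailed emotional timeline is never stored.

```python
import hashlib
import hmac
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical placeholder; a real key would come from a secrets store.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a user ID with a keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def daily_mood_averages(readings: list[dict]) -> dict:
    """Collapse fine-grained mood scores into one average per user per day.

    Keys of the result are (pseudonymous_id, "YYYY-MM-DD") tuples.
    """
    buckets = defaultdict(list)
    for reading in readings:
        # Keep only the calendar day, discarding the exact time of the reading.
        day = datetime.fromisoformat(reading["timestamp"]).date().isoformat()
        buckets[(pseudonymize(reading["user_id"]), day)].append(reading["mood_score"])
    return {bucket: mean(scores) for bucket, scores in buckets.items()}


if __name__ == "__main__":
    sample = [
        {"user_id": "u1", "timestamp": "2024-01-01T10:00:00", "mood_score": 2},
        {"user_id": "u1", "timestamp": "2024-01-01T10:01:00", "mood_score": 4},
    ]
    print(daily_mood_averages(sample))
```

Storing only the daily average means a leak reveals far less than a minute-by-minute mood log would.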

The bigger challenge is balance. Can vibe coding remain creative while respecting transparency in data policies and privacy rights? If the answer is yes, the future may hold safe and fun experiences. If not, vibe coding could collapse under the weight of its own risks.

Final Thoughts

Vibe coding feels exciting at first glance, but the risks run deep. Behind the music, art, and play sit serious threats: emotional AI privacy risks and the exposure of sensitive user data. The only way forward is responsibility, both from developers and from users who demand safer apps.

FAQs on Vibe Coding Privacy Concerns

1. What are vibe coding privacy concerns?

Vibe coding privacy concerns arise when apps track emotions and collect personal data without clear consent. This often exposes sensitive user data, including mood patterns and chats, and creates serious risks for emotional safety and trust.

2. Can vibe coding apps cause emotional AI privacy risks?

Yes. These apps use emotional AI to shape experiences, but they also store private feelings. Because data privacy regulations such as GDPR and CCPA leave emotional data in a gray zone, this creates emotional AI privacy risks that can harm users if data leaks or is sold.

3. Why do new apps leak user data?

Leaks usually come from cybersecurity and app vulnerabilities or from third-party data sharing. Many apps lack secure coding, and once emotional data is shared with advertisers, control is lost. Weak security practices make leaks more likely.

4. How can I protect my data on vibe coding apps?

You can reduce risks by adjusting privacy settings, using VPNs, and reading policies carefully. These digital safety tips for app users combined with strong passwords and encrypted messengers limit exposure if apps mishandle your personal data.

5. What is the future of vibe coding apps?

The future of vibe coding depends on ethical app development and secure coding best practices. If developers follow transparency in data policies and users stay cautious, vibe coding may become safer. Without change, the risks may outweigh the fun.
