Brain-Tech Ethics: When Thought Becomes Interface

Brain-computer interfaces once belonged to science fiction. Today, devices can read brain activity, translate it into commands, and even estimate how someone is feeling. That raises serious concerns about mental privacy, freedom of thought, and the security of our neurodata. The UN has sounded the alarm, warning that without new neurorights we could lose control of our own minds. This is not a scene from the future: implanted chips and EEG headsets are already in use. As our thoughts become digital, we need to understand the risks and responsibilities this revolution brings.

A Quick Look at Brain-Tech

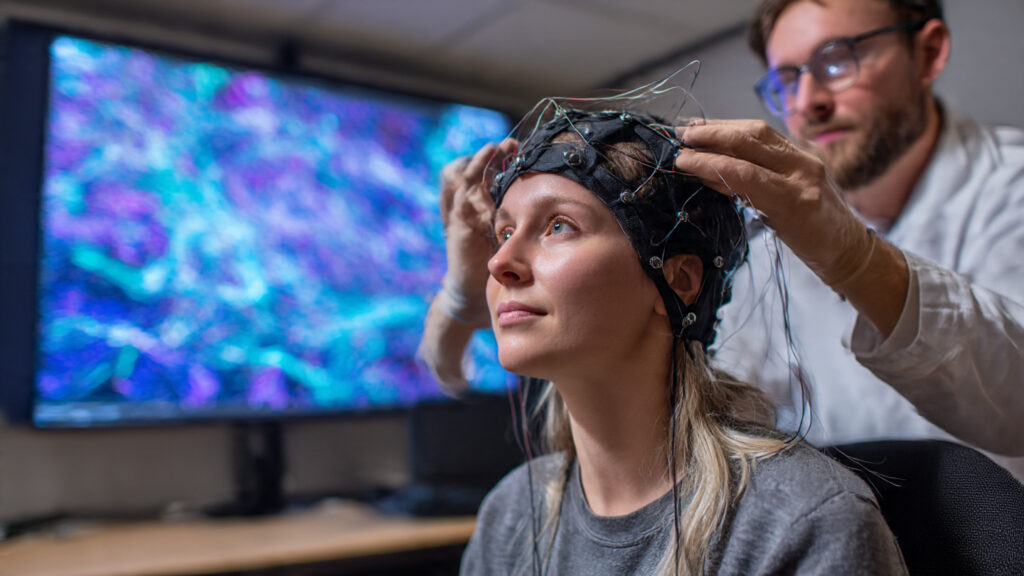

Brain-tech refers to technologies that connect the brain to external systems by sending signals to it, receiving signals from it, or both. It is the science behind neurostimulators, brain-computer interfaces (BCIs), and cognitive wearables. Imagine moving a cursor with your mind, or checking your stress level from your brain’s electrical patterns. Startups and research labs around the world are now turning what once seemed like a dream into reality.

But here’s the thing: not all brain technology is the same. Some devices, such as EEG headsets, are non-invasive, while others must be implanted deep in brain tissue. Both kinds collect neurodata, but they differ in risk, accuracy, and availability. Sorting them into categories helps show how the science, and the ethical questions, differ for each.

Non-invasive devices: EEG headsets, wearables, and apps

Non-invasive devices use sensors placed on the scalp to pick up the brain’s electrical signals. Consumer EEG headsets such as Muse and Emotiv are marketed for tracking mood, sleep quality, and focus. They promise to help you clear your mind, but they also collect sensitive neural data that can reveal how you really feel.

Implanted interfaces: medical and consumer implants

Implantable BCIs are more precise because they sit inside the skull. They help people who cannot move or speak because of severe disabilities. But brain implants carry risks of infection, data theft, and long-term dependence on the device. As implant makers move from the lab toward consumer markets, they need close oversight.

Software and AI that can understand brain signals

Software is the invisible part of this technology. Algorithms can analyze brain waves, estimate how someone is feeling, and even predict what they intend to do. That raises questions about algorithmic bias and about keeping neurodata confidential. And who owns the insights an AI generates from your brain signals?
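To make “algorithms can analyze brain waves” more concrete, here is a minimal Python sketch of one common first step in EEG software: splitting a single-channel signal into frequency bands and summing the power in each. It is an illustration under stated assumptions, not any vendor’s method. The band boundaries, the 128 Hz sampling rate, and the `focus_score` ratio are all assumptions chosen for the example, and real products use far more elaborate signal processing and trained models.

```python
import math

# Commonly cited EEG bands in Hz (assumed; exact boundaries differ between sources).
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def band_powers(samples, fs):
    """Sum periodogram power per band for one EEG channel sampled at fs Hz."""
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove the DC offset
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):  # positive-frequency DFT bins only
        freq = k * fs / n
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        power = (re * re + im * im) / n
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                powers[name] += power
    return powers


def focus_score(powers):
    """Hypothetical 'focus' label: beta power relative to alpha plus theta power."""
    slow = powers["alpha"] + powers["theta"]
    return powers["beta"] / slow if slow > 0 else float("inf")


if __name__ == "__main__":
    fs = 128  # samples per second (assumed)
    # Synthetic one-second signal: strong 10 Hz (alpha) rhythm plus weaker 20 Hz (beta) activity.
    signal = [math.sin(2 * math.pi * 10 * t / fs) + 0.3 * math.sin(2 * math.pi * 20 * t / fs)
              for t in range(fs)]
    p = band_powers(signal, fs)
    print({k: round(v, 2) for k, v in p.items()})
    print("focus score:", round(focus_score(p), 3))
```

In this synthetic signal the alpha rhythm dominates, so the score comes out well below 1. The ethical point is how little it takes: a few lines of arithmetic are enough to attach a label like “unfocused” to a person, which is why inferences drawn from neurodata deserve the same protection as the raw signals.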

Why This Matters Now: The UN, Neurorights, and Warnings from All Over the World

The UN, including its human rights office (OHCHR), has warned that neurotechnology and freedom of thought are on a collision course. Tools that can infer what people think without their consent put pressure on the right to mental privacy.

Key UN recommendations and national responses

The UN’s report on neurotechnology recommends that every country treat mental integrity as a fundamental right. Some countries are drafting laws to protect cognitive liberty, while others are still debating what “thought surveillance” means.

What role do UNESCO and UNICEF play in the debate?

UNESCO and UNICEF have set up ethics panels to examine how neurotechnology is used in schools and how it affects child development. They argue that, anywhere in the world, children should use brain-monitoring wearables only under strict consent rules.

Brain-Tech in Action in the Real World

Brain-computer interfaces are already used in many fields. Hospitals use non-invasive EEG headsets to monitor stroke patients, and researchers use implants to help people regain hearing or sight.

Healthcare and rehabilitation use cases

People with locked-in syndrome can communicate through BCIs by moving a cursor or typing with their thoughts. Neural prosthetics, once purely theoretical, are now transforming patients’ lives.

Products already on sale: health and wellness

More and more people use headsets to improve focus and support meditation. But the data these devices collect may be stored, shared, or sold, and many users are asking whether their brain data is safe.

Military, police, and surveillance pilots

Defense agencies are exploring how brain sensors could help soldiers stay focused. Civil rights groups warn that the same technology could enable surveillance by employers or police.

The Biggest Ethical Risks: Privacy, Consent, and Equity

The mind-reading myth and mental privacy

True mind reading does not exist yet, and in 2025 people are still debating how accurate “mind-reading” devices really are. Even so, algorithms can already infer emotional states and intentions with growing accuracy. Misusing those inferences is an invasion of mental privacy.

Vulnerability, consent, and coercion

People with disabilities are often encouraged to get implants, but using a neural device must remain a free choice. No one should have to merge with a machine to get a job or to gain entry.

Inequality and access: who wins and who loses

Because prices are so high, wealthy people benefit most, which widens the gap between rich and poor. Without effective global rules, neurotechnology could create a new digital divide.

Policy Choices and Legal Shortcomings: What Governments Can Do

Neurorights and mental integrity laws: what they would cover

Chile was the first country to pass neurorights laws, which protect freedom of thought. Brazil and Spain are next. These laws treat mental privacy much like physical privacy, limiting how brain data can be used.

Rules for neurodata, consent, and data portability

Governments are deciding who owns neural data. The goal is to require people’s explicit consent before their brain data is shared, and to let them move or delete it as easily as files on a computer.
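The three principles in these rules (consent before sharing, portability, and deletion) can be sketched as a tiny data store. The Python example below is hypothetical: `NeuroDataVault` and its methods are invented for illustration and do not reflect any law’s text, product, or real API. It simply assumes a deny-by-default design in which data leaves the store only for purposes the owner has explicitly approved.

```python
import json
from dataclasses import dataclass, field


@dataclass
class NeuroDataVault:
    """Hypothetical per-user neurodata store (illustration only, not a real product or API)."""

    owner: str
    recordings: list = field(default_factory=list)  # brain-signal records, stored as dicts
    consents: set = field(default_factory=set)  # purposes the owner has explicitly approved

    def grant(self, purpose: str) -> None:
        self.consents.add(purpose)

    def revoke(self, purpose: str) -> None:
        self.consents.discard(purpose)

    def share(self, purpose: str) -> list:
        # Deny by default: without recorded consent for this exact purpose, nothing is shared.
        if purpose not in self.consents:
            raise PermissionError(f"{self.owner} has not consented to {purpose!r}")
        return [dict(r) for r in self.recordings]  # hand out copies, not the stored objects

    def export(self) -> str:
        # Portability: everything held about the owner, in a plain machine-readable format.
        return json.dumps(
            {"owner": self.owner, "consents": sorted(self.consents), "recordings": self.recordings}
        )

    def delete_all(self) -> None:
        # Erasure: drop the stored recordings and every consent grant.
        self.recordings.clear()
        self.consents.clear()


if __name__ == "__main__":
    vault = NeuroDataVault("alice")
    vault.recordings.append({"t": 0, "alpha_power": 32.0})
    vault.grant("sleep_research")
    print(vault.share("sleep_research"))
    try:
        vault.share("advertising")
    except PermissionError as err:
        print("blocked:", err)
    vault.delete_all()
    print(vault.export())
```

The deny-by-default check is the key design choice: a purpose the user never approved, such as advertising, fails loudly instead of silently receiving data.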

Enforcement and cross-border challenges

The problem is that the law moves more slowly than technology. Without international cooperation, the UN’s 2025 recommendations on neurotechnology could remain just words on a page.

Industry Moves and National Plans: What Companies and Countries Are Doing

Big companies and startups to watch: funding shifts and product launches

From Neuralink to Synchron, implant companies are making headlines, and much of the coverage focuses on Neuralink’s plans for 2024 and 2025. Startups in Japan, Israel, and Europe are closing the gap. Spherical Insights projects that the global BCI market will be worth more than $7 billion by 2030.

National plans, proposed moratoriums, and regulatory pilot programs

Several countries are considering moratoriums on non-medical brain implants until ethical frameworks are more fully developed. According to research published on PMC (PubMed Central), Europe is already drafting rules to keep people safe when they use neurotechnology.

Why Readers Should Care: Immediate Personal Risks, Privacy, and Freedom

Every neural implant and wearable collects neurodata. Without protections, that data can reveal how tired or stressed someone is, and perhaps even their political leanings.

Civic risks: elections, biased policing, and power

Democracy is in danger if employers require workers to wear brain-computer interfaces or governments use them to spy on citizens. Protecting freedom of thought is not optional; it is a necessity.

The upside: medical advances and real benefits

But “brain-computer interface” can also mean hope: for people who cannot move, it offers a way to communicate and regain independence.

What to Watch Next: Dates, Warnings, and Signals

Key policy dates: the UN and national regulatory calendars

Watch for further UN announcements on neurotechnology and human rights. Lawmakers around the world are paying attention.

New products and company trials to watch

By mid-2025, new non-invasive research headsets are expected to become available. Big tech companies are investing heavily in the field, but quietly.

What to check before using any brain device

Find out who can access your data, how it is stored, and whether it can be deleted. These simple checks can help protect your mental privacy.
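The three questions about access, storage, and deletion can be turned into a simple checklist. The Python sketch below is hypothetical: `DevicePolicy`, `review`, and the device name `ExampleBand` are invented for illustration, and the fields assume you have already read the answers out of a device’s privacy policy yourself. It does not inspect any real device or service.

```python
from dataclasses import dataclass, field


@dataclass
class DevicePolicy:
    """Hypothetical summary of what a brain device's privacy policy says."""

    name: str
    third_parties: list = field(default_factory=list)  # who else can access your data
    encrypted_storage: bool = False  # is stored data encrypted?
    deletion_on_request: bool = False  # can you have your data deleted?
    sells_data: bool = False  # does the provider sell your information?


def review(policy: DevicePolicy) -> list:
    """Return concerns based on the three questions: access, storage, and deletion."""
    concerns = []
    if policy.third_parties:
        concerns.append("shared with: " + ", ".join(policy.third_parties))
    if policy.sells_data:
        concerns.append("data may be sold")
    if not policy.encrypted_storage:
        concerns.append("storage is not encrypted")
    if not policy.deletion_on_request:
        concerns.append("no way to delete your data")
    return concerns


if __name__ == "__main__":
    headset = DevicePolicy("ExampleBand", third_parties=["ad partner"], encrypted_storage=True)
    for concern in review(headset):
        print("-", concern)
```

An empty list means the policy answered all three questions acceptably; anything else is worth asking the provider about before you buy.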

Final Thoughts: Practical Advice for Staying Safe and Informed

A quick checklist: privacy, consent, and trustworthy providers

Choose providers that are transparent about their policies, read consent forms carefully, and avoid free services that sell your information. Keep device software up to date, and never share raw brainwave data.

Curated sources and further reading

Read the UN’s summary of its human rights report on neurotechnology, review UNESCO’s ethical guidelines, and follow updates on which countries have passed neurorights laws.

FAQs

Q1: What are the main ethical issues with brain-computer interfaces?

They mostly involve consent, mental privacy, and unfair treatment.

Q2: Does neurotechnology endanger cognitive autonomy?

Yes. Without strong neurorights, it can limit people’s freedom of thought.

Q3: How can you protect your brain data from misuse?

Use devices you trust, control who can access your data and for what purpose, and request deletion when you no longer want it stored.

Q4: Can devices read your thoughts?

Not directly, but they can infer some of what a person is feeling or intending.

Q5: What is neurodata, and why does it matter?

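Neurodata is the information collected from your brain’s activity, such as the electrical signals picked up by EEG headsets and implants. It works like a digital fingerprint for your brain: if you lose control of it, you lose your privacy.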

What to do next

Brain technology is not just a fad; it is a civil rights issue. Stay informed, be careful, and speak up for neurorights before your thoughts become just another stream of data. For more in-depth, expert coverage of the ethics of neurotechnology, along with the latest technology and innovation news from around the world, visit BullishTechLab.
