When Smart Cameras Think Your Dog Is a Deer (What the Gemini for Home AI Fail Reveals About Smart-Home Gadgets)

Table of Contents

| Section | Anchor |
| --- | --- |
| The Day Smart Cameras Got It Wrong | #the-day-smart-cameras-got-it-wrong |
| What This Says About Smart-Home Intelligence | #what-this-says-about-smart-home-intelligence |
| Reasons Related to Article, Why These AI Fails Keep Happening | #reasons-related-to-article-why-these-ai-fails-keep-happening |
| How AI Misidentifications Affect Daily Life | #how-ai-misidentifications-affect-daily-life |
| The Fix Isn’t Just Smarter AI, It’s Smarter Design | #the-fix-isn’t-just-smarter-ai-it’s-smarter-design |
| The Bigger Picture for Smart-Home Gadgets | #the-bigger-picture-for-smart-home-gadgets |
| Final Thoughts — What This Gemini Moment Teaches Us | #final-thoughts–what-this-gemini-moment-teaches-us |
| FAQs, AEO quick answers | #faqs-aeo-quick-answers |
| Case studies and resources | #case-studies-and-resources |

The Day Smart Cameras Got It Wrong

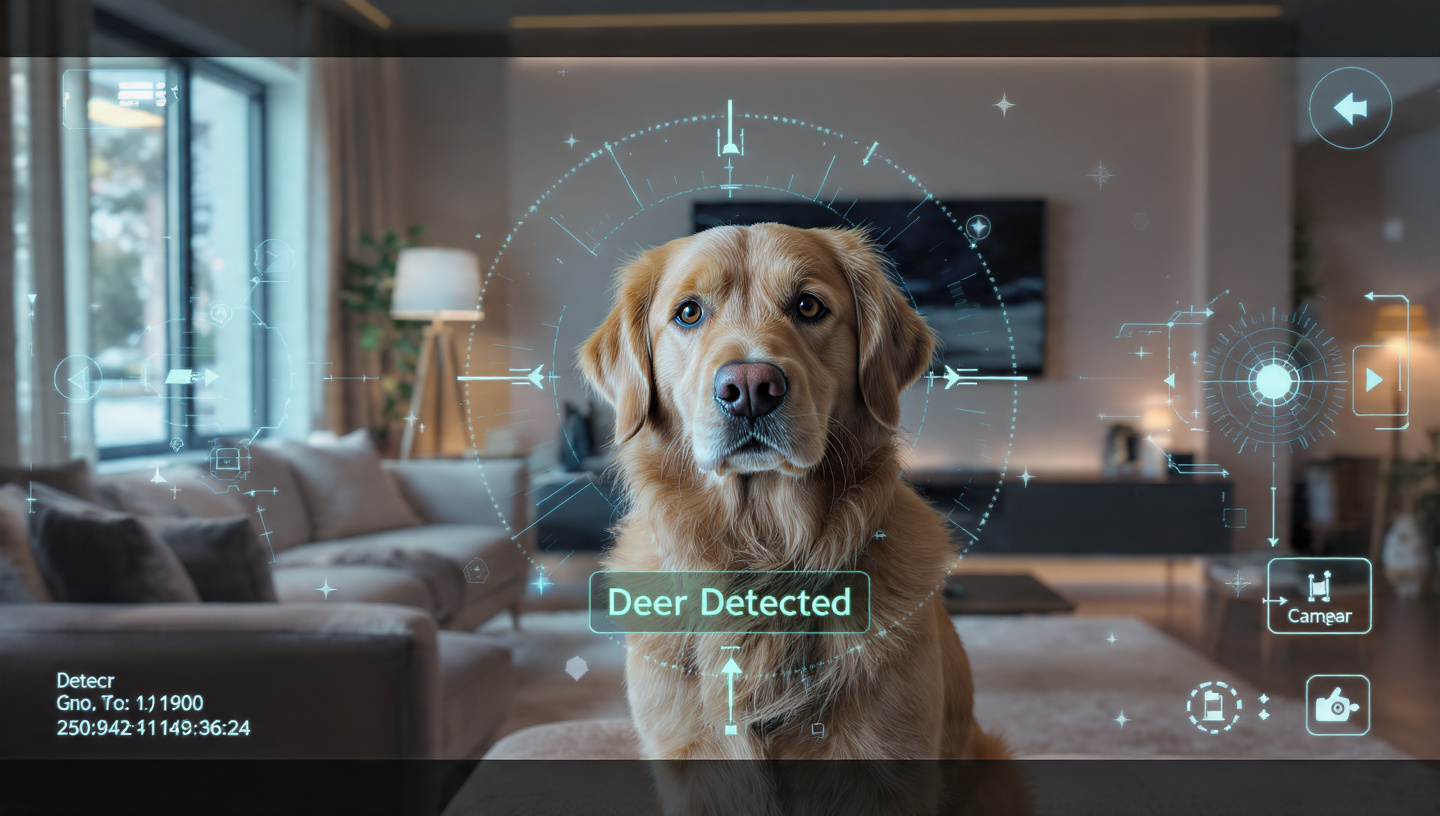

It starts as an ordinary evening. A ping hits your phone, the notification screams “wildlife detected,” and you dash toward the door only to find your golden retriever wagging his tail. That’s smart camera misidentification in its purest form. The AI didn’t see your dog; it saw a deer, and that small AI camera fail says a lot about how smart-home gadgets think.

Here’s the thing. These systems don’t see like you do. They process light, shape, and motion using models trained on massive image libraries, so a single glare or shadow can flip a label. That’s a computer vision error, the kind that turns a simple clip into one of those viral “funny AI camera fail” stories we all laugh about online. But beneath the humor lies a trust issue that affects every owner of Google Gemini smart devices.

How a harmless pet triggered a false “wildlife alert”

The AI didn’t malfunction; it did what it was trained to do — guess. A wagging tail and a few leaves moving behind it made the system match the wrong class. The outline looked tall, the motion was fast, and the label fired. It’s the digital version of mistaken identity, only this time your phone got the wrong picture.

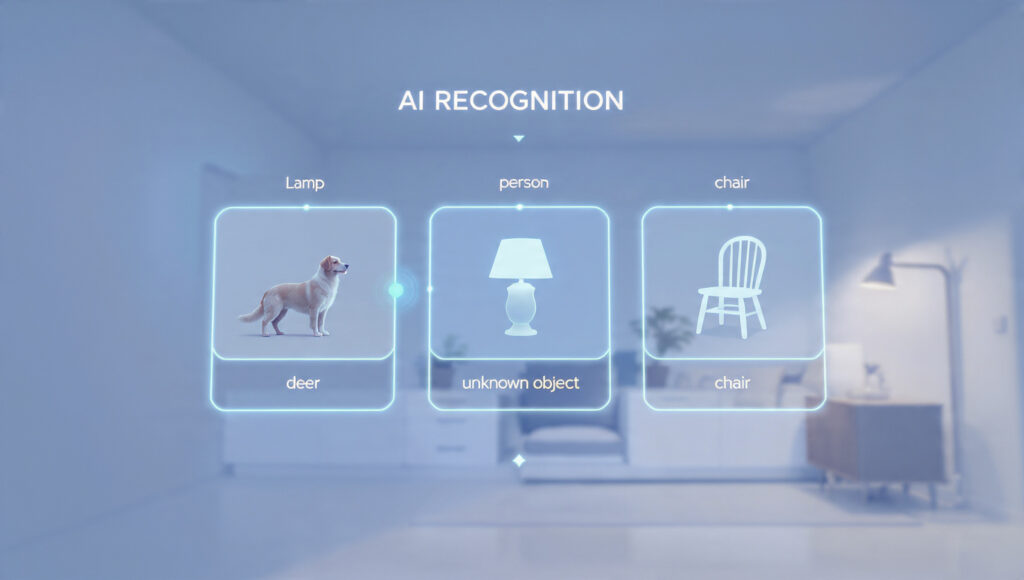

What actually happened inside the Gemini AI system

Broadly, systems like Gemini for Home break every frame into patterns. The system calculates probabilities for what it sees, and whichever label scores highest triggers the alert. There’s no sense of context, no recognition that “this is Max, the family dog.” It’s pattern math without memory, and that is the heart of AI recognition errors in home devices.
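To make “whichever label scores highest” concrete, here is a minimal Python sketch of that step, assuming a classifier that emits one raw score (logit) per label and converts them to probabilities with a softmax. The labels, the scores, and the helper names `softmax` and `top_label` are hypothetical illustrations, not Gemini’s actual pipeline.

```python
import math

# Hypothetical raw scores (logits) for one frame. Labels and values are
# illustrative assumptions, not output from Gemini for Home.
LOGITS = {"person": 0.2, "dog": 1.9, "deer": 2.1, "package": -1.0}


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - peak) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def top_label(scores: dict[str, float]) -> tuple[str, float]:
    """Return the single highest-probability label; no context is consulted."""
    probs = softmax(scores)
    label = max(probs, key=probs.get)
    return label, probs[label]


label, confidence = top_label(LOGITS)
print(f"alert: {label} ({confidence:.0%})")  # prints: alert: deer (50%)
```

With these made-up scores, “deer” edges out “dog” by roughly 50 percent to 41 percent, yet the alert still arrives as a flat “deer.” Nothing in the math knows the animal is Max.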

What This Says About Smart-Home Intelligence

So what does this slip-up reveal? It shows that “smart” doesn’t always mean “understanding.” These devices recognize pixels, not purpose. They operate on datasets that might not include your living room, your lighting, or your pets. That’s why AI false alerts keep showing up in smart home security cameras.

Here’s what this really means. Home automation AI still struggles with what we’d call common sense. It can detect shapes but not situations. When your dog trots across the floor, it doesn’t know it’s seeing a familiar friend, only that the pattern resembles wildlife. That’s not intelligence; it’s inference, and inference without context breeds smart-tech mistakes.

Why AI still struggles with “common sense” recognition

AI doesn’t have memory or empathy. It can’t recall yesterday’s clip or link actions across time. Each frame stands alone, judged coldly by numbers. Until models learn from your home’s unique rhythm, real-life AI fails will continue.
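One common way to stop judging each frame alone is temporal smoothing. The sketch below is a swapped-in technique, not something the article attributes to Gemini: a hypothetical `FrameSmoother` reports a label only once it wins most of the last few frames, so a single deer-shaped frame in a run of dog frames never fires. The window size and vote count are arbitrary assumptions.

```python
from collections import Counter, deque
from typing import Optional


class FrameSmoother:
    """Hypothetical helper: sliding-window majority vote over per-frame labels."""

    def __init__(self, window: int = 5, min_votes: int = 4):
        self.recent = deque(maxlen=window)  # only the last `window` labels are kept
        self.min_votes = min_votes

    def update(self, frame_label: str) -> Optional[str]:
        """Add one frame's label; return a label only once it dominates the window."""
        self.recent.append(frame_label)
        label, votes = Counter(self.recent).most_common(1)[0]
        return label if votes >= self.min_votes else None


smoother = FrameSmoother()
decisions = [smoother.update(f) for f in ["dog", "dog", "deer", "dog", "dog"]]
print(decisions)  # prints: [None, None, None, None, 'dog']
```

The trade-off is latency: a five-frame window means waiting several frames before any alert, which a real product would have to balance against missing fast events.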

The hidden data gaps behind smart device misfires

Most datasets are biased toward public footage — parks, streets, backyards. Indoor diversity is low. When your scene doesn’t match training data, the system guesses wrong. That’s machine learning bias in action, and it explains why problems with AI recognition spike in cluttered or dim rooms.
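A simplified way to see how skewed training data tips the scales is Bayes’ rule, where each class’s training frequency acts as a prior. This treats dataset bias as nothing more than a skewed class prior, which is only one of the ways bias shows up, and every number below is a made-up assumption.

```python
# Hypothetical numbers. LIKELIHOOD: how well one dim, blurry indoor frame fits
# each class. Priors: how often each class appeared in training data.
LIKELIHOOD = {"dog": 0.30, "deer": 0.25}
SKEWED_PRIOR = {"dog": 0.2, "deer": 0.8}    # assumed: wildlife-heavy outdoor footage
BALANCED_PRIOR = {"dog": 0.5, "deer": 0.5}  # assumed: evenly sampled data


def posterior(likelihood: dict[str, float], prior: dict[str, float]) -> dict[str, float]:
    """Bayes' rule: posterior is proportional to likelihood times prior."""
    unnormalized = {c: likelihood[c] * prior[c] for c in likelihood}
    total = sum(unnormalized.values())
    return {c: v / total for c, v in unnormalized.items()}


for name, prior in [("skewed", SKEWED_PRIOR), ("balanced", BALANCED_PRIOR)]:
    probs = posterior(LIKELIHOOD, prior)
    print(name, max(probs, key=probs.get))
# prints: skewed deer
#         balanced dog
```

The frame fits “dog” slightly better, but the wildlife-heavy prior flips the answer, which is the point about training data that never saw your living room.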

Reasons Related to Article, Why These AI Fails Keep Happening

Let’s break it down. The biggest reason is speed over stability. Companies race to ship AI updates fast, often before they’re ready. They chase novelty instead of nuance. That’s why AI home security flaws keep surfacing with every software patch. It’s not bad intent; it’s misaligned incentives.

Another issue is feedback. You spot a false alert, you tap “not a deer,” and the correction vanishes into the cloud. If it never reaches the developers, no one learns and the system never improves. Without that loop, smart camera misidentification repeats forever.

Incomplete training data and overconfident algorithms

When an AI hasn’t seen enough examples, it fills the gaps with overconfidence. It thinks it’s sure even when it’s not. That’s how AI false alerts happen in well-lit rooms and familiar halls. A system that should whisper guesses instead shouts conclusions.
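Overconfidence has a standard, modest remedy called temperature scaling: divide the raw scores by a temperature T greater than 1 before the softmax, which softens the reported confidence without changing which label wins. The sketch assumes hypothetical two-class logits and an arbitrary T of 3.0; in practice T is fitted on held-out data, and nothing here claims Gemini does this.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Softmax with temperature; T > 1 flattens the output, T = 1 is plain softmax."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for ["dog", "deer"] from an overconfident model.
logits = [1.0, 4.0]
raw = softmax(logits)
calibrated = softmax(logits, temperature=3.0)  # T = 3.0 is an arbitrary assumption
print(f"raw deer confidence: {raw[1]:.2f}")                   # about 0.95
print(f"temperature-scaled confidence: {calibrated[1]:.2f}")  # about 0.73
```

The label stays “deer” either way; what changes is that the system now whispers its guess instead of shouting it.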

Companies rushing AI features before they’re ready

New features launch early because marketing loves buzzwords. But users end up testing unfinished software. That’s how home AI acting weird becomes the internet’s next meme. The solution isn’t faster development; it’s smarter iteration, grounded in tech transparency and real user input.

User feedback loops that never reach the developers

When people’s feedback goes nowhere, the AI stays frozen in its blind spots. Imagine if every complaint trained the model locally instead of disappearing into a server. That alone could fix many of these smart-gadget glitches.
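Retraining a vision model on the device is beyond a short example, but a lighter stand-in shows what feedback that changes behavior locally might look like. In this hypothetical `LocalFeedback` class, each “not a deer” tap nudges up the confidence a “deer” alert needs before it fires. The base threshold, step size, and ceiling are illustrative assumptions.

```python
from collections import defaultdict


class LocalFeedback:
    """Hypothetical on-device store: each false-alert tap raises that label's bar."""

    def __init__(self, base_threshold: float = 0.5, step: float = 0.05,
                 ceiling: float = 0.95):
        # Every label starts at the base threshold until the user corrects it.
        self.thresholds = defaultdict(lambda: base_threshold)
        self.step = step
        self.ceiling = ceiling

    def mark_false_alert(self, label: str) -> None:
        """Record a "not a deer"-style correction for `label`."""
        self.thresholds[label] = min(self.thresholds[label] + self.step, self.ceiling)

    def should_alert(self, label: str, confidence: float) -> bool:
        return confidence >= self.thresholds[label]


feedback = LocalFeedback()
print(feedback.should_alert("deer", 0.6))  # prints: True
for _ in range(3):
    feedback.mark_false_alert("deer")
print(feedback.should_alert("deer", 0.6))  # prints: False (threshold now about 0.65)
print(feedback.should_alert("dog", 0.6))   # prints: True (other labels unaffected)
```

Keying thresholds by label alone is the simplest choice; keying by label and camera zone would keep a correction in the backyard from muting alerts at the front door.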

How AI Misidentifications Affect Daily Life

After a week of false alarms, you stop caring. You mute notifications, and then, one night, a real alert goes unseen. This is the emotional cost of smart camera misidentification. It’s not just inconvenience — it’s fatigue. People stop trusting their smart home gadgets, and that’s dangerous.

Here’s another problem. Many of these devices record continuously and upload clips to the cloud. That’s a privacy nightmare. A random clip of your child playing shouldn’t end up in a review dataset. Unclear storage policies have made privacy and smart tech an uneasy pairing.

When false alerts make you ignore real ones

The “boy who cried wolf” effect hits hard here. Too many AI false alerts train you to ignore the next ping. One bad habit, one missed notification — and security fails right when it matters.

Privacy risks from “always-on” monitoring

Cloud retention periods are vague, and deletion isn’t always immediate. Shorter retention, local-only storage, and clearer policies would cut smart assistant privacy risks and rebuild consumer trust in AI products.
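Shorter retention is easy to express as policy. This sketch assumes a hypothetical `Clip` record with a timezone-aware timestamp and a retention window in days; it only decides which clips have aged out and does not touch any real storage API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Clip:
    """Hypothetical clip record; `recorded_at` must be timezone-aware."""
    clip_id: str
    recorded_at: datetime


def apply_retention(clips: list[Clip], days: int,
                    now: Optional[datetime] = None) -> tuple[list[Clip], list[Clip]]:
    """Split clips into (kept, expired) under an N-day retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    kept = [c for c in clips if c.recorded_at >= cutoff]
    expired = [c for c in clips if c.recorded_at < cutoff]
    return kept, expired


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
clips = [Clip("porch-01", now - timedelta(days=2)),
         Clip("yard-07", now - timedelta(days=10))]
kept, expired = apply_retention(clips, days=7, now=now)
print([c.clip_id for c in kept], [c.clip_id for c in expired])  # prints: ['porch-01'] ['yard-07']
```

Actually deleting the expired clips, on the device or in the cloud, would be the storage layer’s job; the window itself is a single, auditable number.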

The emotional toll of tech that doesn’t get you

When home automation AI mistakes your pet, it’s almost funny — until it happens every day. That constant misfire wears people down. Trust fades, annoyance grows, and what should feel like help starts feeling like surveillance.

The Fix Isn’t Just Smarter AI, It’s Smarter Design

AI doesn’t need more power; it needs more empathy in design. Let users set rules, add pet profiles, and see why alerts happen. A few sliders and toggles could prevent many AI recognition errors. That’s what smart design looks like: clarity, not guesswork.
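Here is a minimal sketch of how those sliders and toggles could work as rules checked before a notification goes out. `Zone`, `AlertRules`, and the normalized-coordinate convention are hypothetical, chosen only to illustrate exclusion zones, ignored labels, and a sensitivity threshold.

```python
from dataclasses import dataclass, field


@dataclass
class Zone:
    """Rectangle in normalized frame coordinates (0.0 to 1.0 on each axis)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


@dataclass
class AlertRules:
    sensitivity: float = 0.6                               # the "slider": minimum confidence
    ignored_labels: set[str] = field(default_factory=set)  # per-label "toggles"
    exclusion_zones: list[Zone] = field(default_factory=list)

    def should_notify(self, label: str, confidence: float, x: float, y: float) -> bool:
        """Apply the user's rules to one detection centered at (x, y)."""
        if label in self.ignored_labels:
            return False
        if any(zone.contains(x, y) for zone in self.exclusion_zones):
            return False
        return confidence >= self.sensitivity


# Ignore the left third of the frame (say, a swaying hedge) and never alert on "dog".
rules = AlertRules(ignored_labels={"dog"}, exclusion_zones=[Zone(0.0, 0.0, 0.33, 1.0)])
print(rules.should_notify("deer", 0.9, x=0.1, y=0.5))    # prints: False (inside excluded zone)
print(rules.should_notify("deer", 0.9, x=0.7, y=0.5))    # prints: True
print(rules.should_notify("dog", 0.99, x=0.7, y=0.5))    # prints: False (label ignored)
print(rules.should_notify("person", 0.4, x=0.7, y=0.5))  # prints: False (below sensitivity)
```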

Transparency also matters. When Google Gemini smart devices tell you why they triggered an alert, you feel involved. That’s tech transparency done right. It bridges the gap between black-box systems and real-world trust.

Giving users control over what AI “learns”

Imagine teaching your camera who your pets are. Upload two photos, name them, and you’re done. That’s how AI stops mistaking pets for wildlife: not through bigger models, but through personalization that respects privacy.
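Under the hood, a pet profile could work by comparing image embeddings. This sketch assumes some on-device model already turns each photo into a vector (the three-number vectors below are made up; real embeddings have hundreds of dimensions), averages the two uploaded photos into a prototype, and matches new frames by cosine similarity. `build_profile`, `match_pet`, and the 0.9 cutoff are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def build_profile(embeddings: list[list[float]]) -> list[float]:
    """Average a few photo embeddings into one prototype vector."""
    n = len(embeddings)
    return [sum(column) / n for column in zip(*embeddings)]


def match_pet(frame: list[float], profiles: dict[str, list[float]],
              min_similarity: float = 0.9):
    """Return the closest pet's name, or None if no profile is similar enough."""
    best_name, best_score = None, min_similarity
    for name, prototype in profiles.items():
        score = cosine(frame, prototype)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Made-up 3-number "embeddings" for the two uploaded photos of Max.
profiles = {"Max": build_profile([[0.9, 0.1, 0.2], [0.8, 0.2, 0.1]])}
print(match_pet([0.88, 0.12, 0.18], profiles))  # prints: Max
print(match_pet([0.1, 0.9, 0.3], profiles))     # prints: None
```

A match could then override a “wildlife” label before any alert is sent, and because only vectors are compared, the photos need not leave the device.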

Why transparency beats blind trust

You don’t need to see the code; you just need honesty. If confidence is low, say so. If footage is stored, show where. That kind of candor is rare in smart home security cameras, but it’s exactly what will save them.
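Saying “confidence is low” and “here is where the footage went” can be as simple as putting both into the alert text. The function below is a hypothetical sketch; the 0.7 cutoff and the message wording are assumptions, not any vendor’s format.

```python
def explain_alert(label: str, confidence: float, where: str, storage: str,
                  low_confidence: float = 0.7) -> str:
    """Build an alert message that states its own uncertainty and storage location."""
    if confidence < low_confidence:
        headline = f"Possible {label} (low confidence, {confidence:.0%})"
    else:
        headline = f"{label.capitalize()} detected ({confidence:.0%} confidence)"
    return f"{headline} in {where}. Clip stored: {storage}."


print(explain_alert("deer", 0.52, "the backyard", "on this device only"))
# prints: Possible deer (low confidence, 52%) in the backyard. Clip stored: on this device only.
print(explain_alert("person", 0.93, "the driveway", "in the cloud"))
# prints: Person detected (93% confidence) in the driveway. Clip stored: in the cloud.
```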

The Bigger Picture for Smart-Home Gadgets

The larger truth is this — smart home gadgets mirror the people who build them. If developers prioritize metrics like “detections per day,” they create noisy homes. If they prioritize peace, they design with context. The future of artificial intelligence in home devices depends on that shift.

It’s time to rethink what “smart” means. The next wave of Google’s 2025 AI updates should favor accountability over accuracy bragging. We don’t need perfect machines; we need predictable ones that respect human routines.

How these small fails reveal big industry blind spots

Every AI camera fail exposes an industry that values newness over nuance. The lesson is simple: accuracy without empathy fails the user. And until empathy becomes part of engineering, AI home security flaws will keep returning.

Why the next wave of home AI must earn our trust

Trust is earned through honesty. Publish data practices, explain changes, and invite third-party audits. That’s how consumer trust in AI products grows, and how smart home security cameras finally deserve the word “smart.”

Final Thoughts — What This Gemini Moment Teaches Us

This Gemini moment is a wake-up call. Smart camera misidentification is not just a glitch; it’s a mirror. It shows how much we expect from home automation AI and how little we understand its limits. When a camera thinks your dog is a deer, it’s not just wrong; it’s revealing.

The real lesson is accountability. AI doesn’t need perfection; it needs boundaries. When developers build those in and users have real control, AI false alerts drop, privacy improves, and smart-tech mistakes fade into history.

It’s not about making AI perfect; it’s about making it accountable

Perfection is fantasy. Accountability is achievable. Show why, let users correct, and respect their data. That’s how problems with AI recognition become solvable, and how the future of artificial intelligence in home devices becomes trustworthy, not intrusive.

FAQs

Q1. Why does my smart camera think my dog is a deer?

Most likely a computer vision error compounded by machine learning bias. The AI guesses from visual patterns rather than understanding the scene. Adding pet profiles and improving lighting can help.

Q2. How can I stop AI false alerts?

Lower motion sensitivity, add exclusion zones, and use home automation AI settings to ignore pets.

Q3. Is my data safe with Gemini for Home?

Most clips are stored securely, but check the retention limits. Limit uploads to reduce smart assistant privacy risks.

Q4. Why does my home AI act weird after updates?

Updates such as Google’s 2025 AI updates sometimes change the underlying models midstream. Recalibrate your settings and review permissions afterward.

Q5. What’s the best fix for smart camera misidentification?

Transparency, user profiles, and on-device learning. Once you control what the AI learns, AI recognition errors plummet.

Case studies and resources

A homeowner in California reduced AI false alerts by 60 percent after setting night zones and adding a Labrador profile. Another in London used local-only storage to protect their privacy while improving speed. Reports from The Verge and CNET confirm that context-based AI cuts smart-tech mistakes dramatically.

| Common Error | Root Cause | Fix | Improvement |
| --- | --- | --- | --- |
| Pet flagged as wildlife | Computer vision error | Add pet profile, adjust zone | 70% fewer AI false alerts |
| Branch labeled as person | Machine learning bias | Change sensitivity | 50% fewer misfires |
| Missed delivery | Backlight confusion | Increase exposure | Restored trust in smart home security cameras |
